A definitive guide (for marketers, developers and everyday users) on what web scraping is and how to use it.

Web scraping is a useful tool for harvesting data from websites that don't offer an application programming interface (API). Marketers, web developers and ordinary users can employ it to save time and automate everyday tasks.

Let’s talk more about what web scraping is, why it’s so popular and the controversy surrounding it.

What Is Web Scraping?

Web scraping involves using a computer program, script or bot to impersonate a human user, download web pages and parse through the contents to look for specific information.

This technology makes it possible to pull data from a large number of sites — or many pages on a single site — in a short amount of time. Scrapers can also save that information to a database for future use.

Web scraping, also called screen scraping or data harvesting, is not a new practice, or even an unusual one. Archie, widely regarded as the first search engine, used a primitive form of the technique back in 1990, visiting public FTP servers and building an index of the files it found.

Bandwidth limitations meant Archie didn't parse the contents of the files, but its successors did. In 1993, JumpStation published indexes that included the titles and headers of pages, and in 1994, Lycos launched and began cataloging page contents in full.

In the 2020s, the web is far bigger, but scraping tools are also far more widely available. Anyone can harvest data from hundreds (or thousands) of pages at the push of a button.

Why Use Web Scraping?

Web scraping has many uses, including:

- Collecting email addresses and other contact details

- Gathering prices and product information

- Watching for sporting results

- Observing a page for changes

In the early days of the web, people used scrapers to create rudimentary price comparison sites or deal finders. Some marketers used them to harvest webmasters' emails and build email marketing lists.

Today, there’s less need for web scraping because the digital marketing space has matured. Services like PriceAPI make it simple to create the same types of comparison sites without scraping.

Using web scrapers to gather contact details, however? Still commonplace.

Julie Arden wrote for HackerNoon that when "collecting email addresses from the web, professional scrapers usually parse data from social networks (LinkedIn, Facebook, etc.) or forums." For legal entities, however, the information comes from the organizations’ official websites.

The primary advantage of scrapers, added Arden, is that they work very fast. "One can find a hundred addresses in a couple of minutes." In comparison, it would take a person days, maybe weeks, to visit a thousand web pages one by one and find contact information.

However, the practice of web scraping is often frowned upon, as it’s sometimes misused. When companies can quickly build giant contact lists — sometimes with people who did not consent to receive communications — it opens the floodgates for spam.

Web Scraping for Individuals

Web scraping isn’t limited to companies collecting data. Individuals can also use it to monitor websites and scan for recent updates.

For instance, say you want to buy a ticket to a highly anticipated concert or snag the year’s hottest Christmas gift for your kid. You could use a web scraping browser extension, like Check4Change, to monitor the page. When there’s an update, you’ll receive a notification.
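
The idea behind page monitoring can be sketched in a few lines of Python. This is only an illustration of the general technique (store a fingerprint of the page, then compare it with a fresh download) and not a description of how Check4Change works internally. The function names and the sample ticket pages are hypothetical.

```python
import hashlib
import urllib.request


def fingerprint(page_bytes: bytes) -> str:
    """Return a SHA-256 hex digest that differs whenever the content differs."""
    return hashlib.sha256(page_bytes).hexdigest()


def has_changed(previous_fingerprint: str, page_bytes: bytes) -> bool:
    """Compare the stored fingerprint with a freshly downloaded page."""
    return fingerprint(page_bytes) != previous_fingerprint


def fetch(url: str) -> bytes:
    """Download a page with the standard library; the URL is the caller's choice."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


if __name__ == "__main__":
    # Offline demonstration using two hypothetical versions of a ticket page.
    old_page = b"<html><body>Tickets: sold out</body></html>"
    new_page = b"<html><body>Tickets: on sale now</body></html>"
    saved = fingerprint(old_page)
    print(has_changed(saved, old_page))  # False
    print(has_changed(saved, new_page))  # True
```

In practice, a script like this would call fetch() on a schedule and send a notification when has_changed() returns True. Pages that embed timestamps or rotating ads will trigger false alarms, so real tools usually compare only a selected part of the page.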

Scraping is also helpful for academics. A researcher could download all news articles or discussion posts related to a specific topic, archive them and search at their leisure.

Is Scraping Data From Websites Legal?

Web scraping is a controversial topic — and a confusing one, from a legal point of view.

According to Arezou Rezai of UK-based law firm Paris Smith, "There is no specific law prohibiting scraping." However, some companies have sued developers who’ve used scrapers, with mixed results.

Courts weigh several points when deciding whether a particular instance of scraping is legal, including:

- Was the data harvested protected under copyright law?

- Was the data collection activity forbidden under the site’s terms and conditions?

Rezai explained that in the 2013 case of NLA (The Newspaper Licensing Agency) vs. Meltwater, a media monitoring company, the Supreme Court ruled in favor of the NLA. It stated that Meltwater's use of news headlines was enough to amount to copyright infringement because creating headlines required creative input.

In contrast, in the 2015 case of airline Ryanair vs. PR Aviation, the operator of a flight comparison website, the Court of Justice of the European Union ruled that Ryanair didn't have intellectual property rights to the scraped data, which consisted of flight times and prices.

US Treats Scrapers More Favorably

Web scraping cases are complex, and laws vary internationally. Networking platform LinkedIn accused analytics company HiQ of scraping its user profiles, saying it was a violation of the site's terms of service that amounted to hacking and, as such, violated the Computer Fraud and Abuse Act (CFAA).

In 2019, however, the US Ninth Circuit Court of Appeals ruled in favor of HiQ, saying that harvesting data that is publicly accessible online is not a CFAA violation.

Data analyst Tom Waterman explained, "The decision was a historic moment in the data privacy and data regulation era. It showed that any data that is publicly available and not copyrighted is fair game for web crawlers."

LinkedIn attempted to appeal the decision, with the case reaching the US Supreme Court in 2021. However, the case was ultimately sent back to the Ninth Circuit, which upheld its original ruling.

Still, these rulings focused on data harvesting rather than how that data is used. Waterman stressed, "The decision does not grant HiQ or other web crawlers the freedom to use data for unlimited purposes."

For instance, one startup, Clearview AI, is facing lawsuits from several tech giants, including Facebook, Instagram and Venmo, for scraping user profile photos for use in facial recognition software, sparking privacy concerns. Yet it's still unclear how the courts will rule in the matter.

Seek Advice Before Performing Large-Scale Scraping

All in all, website scraping is still a legal gray area, and would-be scrapers should consider seeking legal advice before taking action.

Plus, Rezai explained, "If the data being scraped includes personal data, then compliance with data protection law must also be borne in mind."

If the plan is to gather large amounts of data for commercial use, he suggested getting consent first, saying, "The only way to be truly certain that the rights of a website owner have not been infringed is to obtain their express consent to the screen scraping and subsequent use of the information."

Popular Web Scraping Tools

Gathering data from websites is relatively easy, even for less technical users, thanks to robotic process automation tools, such as:

- Atomic Lead Extractor: for gathering phone numbers

- Email Extractor: for collecting email addresses

- Web Scraper: for dynamic data extraction

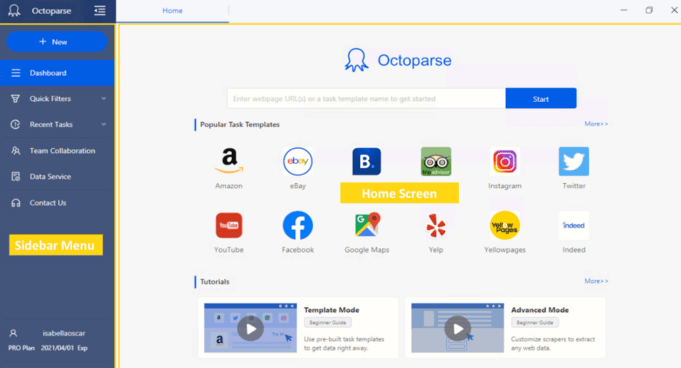

- Octoparse: for no-code, configurable web scraping

The tools above don’t require coding knowledge and, as long as you’re looking to extract data in a predictable format, will get the job done quickly.

How to Create Your Own Website Scraper

Some people, like developers and marketers, might have more complex data needs. For instance, maybe the websites they’re trying to scrape have blockers in place. Or perhaps the way the information is displayed isn’t readable to rudimentary scraping scripts.

In these instances, building a custom scraper makes sense. And one of the most popular tools to do so is Beautiful Soup, a library for the Python programming language. Beautiful Soup parses HTML and XML documents. It does not download pages itself, but paired with an HTTP client such as the Requests library or Python's built-in urllib, it can read simple web pages.
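
A minimal sketch of the parsing step might look like the following, assuming the beautifulsoup4 package is installed. The HTML is an inline, made-up sample standing in for a downloaded page, and the class names (product, name, price) and the extract_products function are hypothetical. A real site will need selectors that match its own markup.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup standing in for a downloaded product page.
SAMPLE_HTML = """
<html>
  <body>
    <h1>Example Store</h1>
    <ul>
      <li class="product"><span class="name">Widget</span> <span class="price">$9.99</span></li>
      <li class="product"><span class="name">Gadget</span> <span class="price">$24.50</span></li>
    </ul>
  </body>
</html>
"""


def extract_products(html: str) -> list[dict[str, str]]:
    """Return the name and price of every <li> marked class="product"."""
    soup = BeautifulSoup(html, "html.parser")  # parser that ships with Python
    products = []
    for item in soup.find_all("li", class_="product"):
        name = item.find("span", class_="name").get_text(strip=True)
        price = item.find("span", class_="price").get_text(strip=True)
        products.append({"name": name, "price": price})
    return products


if __name__ == "__main__":
    for product in extract_products(SAMPLE_HTML):
        print(product["name"], product["price"])
```

Keeping the parsing logic in its own function, separate from any download code, makes it easy to test against saved copies of a page without sending repeated requests to the site.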

If you need to interact with elements on a website, complete forms, follow redirects or store cookies, the MechanicalSoup library may come in handy.

MechanicalSoup impersonates a browser. Developers can set up user-agent parameters, and many developers set their bot's user agent to match that of a popular browser to reduce the risk of requests being denied by the server.

One common user agent is:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36

Sending the above string of text tells the server that your bot is a recent copy of Chrome running on Windows 10. The server is typically more willing to answer requests from what it thinks is a normal user's browser than from an unknown Python script.
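
As a rough illustration, here is how a user agent can be attached to a request. To stay dependency-free, this sketch uses Python's standard urllib rather than MechanicalSoup, and the build_request helper and the example.com URL are placeholders chosen for the example.

```python
import urllib.request

# The browser-style user agent quoted in the article.
BROWSER_USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
)


def build_request(url: str, user_agent: str = BROWSER_USER_AGENT) -> urllib.request.Request:
    """Create a request object whose User-Agent header is set explicitly."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


if __name__ == "__main__":
    # Nothing is sent over the network until urllib.request.urlopen() is called.
    request = build_request("https://example.com/")
    # urllib stores header names capitalized as "User-agent".
    print(request.get_header("User-agent"))
```

Passing the resulting object to urllib.request.urlopen() would send the request with that header in place of Python's default user agent.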

Both Beautiful Soup and MechanicalSoup can be installed using pip, the package installer for Python, under the package names beautifulsoup4 and MechanicalSoup.

Tips for Making a Safe Scraper

Because of the controversy surrounding website scrapers, it's important for developers making such tools to think carefully about how they work and to treat the websites they parse with respect.

Consider the following tips for developing a safe tool:

- Use the site's own API if it’s available

- Limit the number of requests made per minute to reduce bandwidth waste

- Save data as you go to prevent repeat requests if the script crashes

- Limit the number of connections the script opens at once

- Avoid using proxies or VPNs to bypass restrictions put in place by the server

- Respect the site's robots.txt instructions

- Give the website credit if you share data gathered via scraping
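
Two of the tips above, respecting robots.txt and limiting the request rate, can be sketched with Python's standard library alone. This is one possible arrangement rather than a complete scraper: the robots.txt content, the example-bot name, the URLs and the Throttle class are all hypothetical, and the actual download step is left out.

```python
import time
import urllib.robotparser

# Hypothetical robots.txt content. A real scraper would load the file from the
# target site, for example with RobotFileParser.set_url() followed by read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def load_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt text into a rule set that can be queried per URL."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_seconds: float) -> None:
        self.min_interval = min_interval_seconds
        self._last_request = None  # monotonic timestamp of the previous request

    def wait(self) -> None:
        """Sleep just long enough to respect the minimum interval."""
        if self._last_request is not None:
            elapsed = time.monotonic() - self._last_request
            if elapsed < self.min_interval:
                time.sleep(self.min_interval - elapsed)
        self._last_request = time.monotonic()


def allowed_urls(urls, rules, user_agent="example-bot"):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if rules.can_fetch(user_agent, url)]


if __name__ == "__main__":
    rules = load_rules(SAMPLE_ROBOTS_TXT)
    throttle = Throttle(min_interval_seconds=0.5)
    candidates = [
        "https://example.com/products",
        "https://example.com/private/report",
    ]
    for url in allowed_urls(candidates, rules):
        throttle.wait()
        print("would fetch", url)  # the download itself is omitted here
```

With the sample rules, only the /products URL passes the filter. Calling throttle.wait() before every request keeps the script to at most one request per interval, no matter how quickly the rest of the loop runs.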

Empirical Data, a data science consulting firm, recommended that developers identify themselves to webmasters by providing their contact details in their scraper bot's user-agent parameters.

"The website’s administrator may notice some unusual traffic happening. Manners come first, so let them know who you are, your intentions and how to contact you for more questions."

This advice contradicts the tip of making your bot look like a standard web browser. However, it’s a smart move for developers looking to scrape significant amounts of data.

How to Develop a More Powerful Scraper

Some websites are so complex that even a custom tool built with Beautiful Soup and MechanicalSoup won’t help. In those instances, a browser automation tool like Selenium, or a dedicated crawling framework like Scrapy, could be the best option.

Kevin Sahin, co-founder of ScrapingBee, explained that Selenium specifically is useful for:

- Scrolling web pages

- Clicking on buttons

- Taking screenshots

- Completing forms

- Executing JavaScript code

If a developer needs to interact with a Single Page Application or something that relies heavily on JavaScript, automating the interactions through Selenium makes the job much easier.

Selenium offers bindings for several programming languages, including Python. The tool can be used with Chrome, Firefox and Safari, among other browsers.

Developers can run their browser of choice in headful mode and watch as their script interacts with the browser. Alternatively, the script can run the browser in headless mode, which consumes fewer resources.

Selenium can be installed using pip. Sahin recommended that developers install and use the library in a virtual environment for added security.

The Bottom Line

Web scraping, despite its gray areas, has many practical uses. If developed right, a scraper can help organizations and individuals alike collect and analyze information faster.

Still, anyone looking to parse through websites in this way must be mindful of what data they collect, how they collect it and what they do with it afterward.